Using ChatGPT we often go through a lot of topics, ideas, and questions. I found myself copying and pasting large blobs of text from the chat just to be able to note something for further research. For a long time I wished I could just ask the AI to remember something for me. Nothing fancy, just a simple “Hey, remember that. I want to research that later.”. Today I want to present you with how to add that functionality to your ChatGPT by creating your own GPT and hooking it up to a simple API that you can easily host for free.

This article assumes that you can create your own GPTs. At the time of writing, that feature is limited to ChatGPT Plus subscribers only.

Demonstration

Let’s say that I added the long-term memory functionality to a GPT that helps me with brainstorming on a novel. Let’s check if it has something in it’s long-term memory:

Me: Do you remember any topics we left for future development?

Notice that we gave it some extra context to the query: “…topics we left for future development?”. This could make it ignore some unrelated memories.

Looks like our GPT already has a memory:

GPT: Yes, we have a topic for future development: the concept of genetic mutations in the Immune Humans, which enable them to resist The Entity’s influence. This area requires further exploration, particularly focusing on the of quantum fluctuations in biological processes

Let’s ask it to remember something additional, where we provide a full context:

Me: Please remember that we also have to look more into how the life essence, through dimensional interference, allows for biological processes to exist. I need to remember to expand that section of the knowledge base.

If that was a longer conversation we could simply ask it to remember some pars of it or event to remember the gist of the whole conversation. Our GPT responded with the confirmation:

GPT: I have saved the topic of exploring the role of life essence in biological processes, on the interplay between the Physical and Incorporeal dimensions, to our memory for future development This will help us our understanding of how life essence, influenced by dimensional interference is essential for processes in the novel’s universe.

You can see that when saving the memory the GPT is able to also reference other bits of knowledge available to it and expanded upon the prompt giving it more context. To be more specific, it referenced “Physical and Incorporeal” dimensions, which are store in it’s knowledge base.

Now if we delete the conversation and create a new chat with the same GPT, it should remember the memory we asked for it to save. Let’s check:

Me: Do you remember any topics we left for future development?

GPT: Yes, there are topics we previously discussed for future development:

- Immune Humans: We planned to further research and develop the concept of genetic mutations enabling immunity to The Entity’s influence. This includes focusing on the implications of quantum fluctuations in biological processes.

- Life Essence and Biological Processes: We aimed to expand on the role of life essence in biological processes, emphasizing the interaction between the Physical and Incorporeal dimensions. This was intended to deepen the understanding of how life essence, influenced by dimensional interference, is essential for biological processes in the novel’s universe.

Great! The GPT remembered both memories across different conversations and is able to list them. Now let’s move on to setting it up for your own GPT.

Setup

The long-term memory consists of a few pieces:

- Storage for the memories

- API to interact with the storage

- GPT action that knows how to interact with the API

- Prompt section that instructs the GPT how to use the action

Deploying API on Val Town

For storage and API we can use the wonderful Val Town service. It describes itself as:

If GitHub Gists could run and AWS Lambda were fun. Val Town is a social website to write and deploy TypeScript. Build APIs and schedule functions from your browser.

It’s a great tool where we can write some JavaScript/TypeScript directly in a browser and run it as a serverless function. It’s free, very simple to grasp and fun to tinker with. Each code snippet is referred to as Val. We will use several features of Val Town:

- Ability to reference any NPM packages in our code

- Ability to reference other Vals as if they were NPM packages

- Exposing your Vals as an API endpoints that can be called from anywhere

- Storing data in a free Key-Value blob storage

First we need to create ourselves a new account on Val Town. Once your logged in, you can fork my example Val - this will create a copy of it on your account and allow you to edit it and deploy it. Simply press the Fork button in the top right corner of the Val preview:

This Val imports some other Val that contains the whole memory API: xkonti/gptMemoryManager. It also defines a series of values that we can use to configure our API:

apiName- the name of your API. It will be used in the Privacy Policy (eg.Memory API)contactEmail- the email to provide for contact in the Privacy Policy (eg.[email protected])lastPolicyUpdate- the date the Privacy Policy was last updated (eg.2023-11-28)blobKeyPrefix- the prefix for the blob storage keys used by your API - more info below (eg.gpt:memories:)apiKeyPrefix- the prefix for you API Keys secrets - more info below (eg.GPTMEMORYAPI_KEY_)

Only the contactEmail has to be changed. If you don’t have a need, you can leave the rest as is.

After making changes make sure to:

- Hit the Save button in the top right corner of the Val preview.

- Change the Val visibility to Unlisted next to it’s name - this will make it so that the API is accessible from anywhere, but it’s not listed on your profile page.

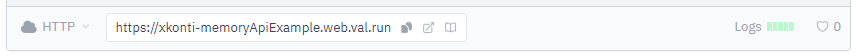

And just like that, you have your own API deployed on Val Town. On the bottom of your Val preview you should see a status bar with HTTP and the address of your API. You can copy the address of your API from the status bar for later use.

API Key for your GPT

The API we just deployed can be used by multiple GPTs, where each GPT has it’s own separate memory (you can make them share as well if you wish so). Each GPT that will access the API needs to have a name and API key.

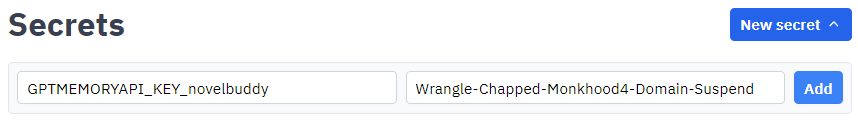

The name needs to be a unique alphanumeric name that will be used to identify that GPT. It will be used to relate the API key with the memory storage. Come up with a name for your GPT, for example novelbuddy.

The API key is a secret that will be used to authenticate the GPT with the API. It can be any alphanumeric string, but it’s recommended to use a long random string. You can use a password generator to generate one. It could be something like Wrangle-Chapped-Monkhood4-Domain-Suspend.

Now that you have your name and API key you need to add them as a secret to you Val account. You can navigate to the Secrets page and click the New secret button. The key of the secret will be the apiKeyPrefix + name of your GPT. For example, if your apiKeyPrefix is GPTMEMORYAPI_KEY_ and your GPT name is novelbuddy, then the key of the secret will be GPTMEMORYAPI_KEY_novelbuddy.

The value of the secret will be the API key you generated for your GPT.

Hit Add button to save the secret. Now we can move on to configuring your GPT.

GPT Action

The API is now fully deployed and configured. Now we can focus on adding the long-term memory functionality to your GPT.

If you don’t have your custom GPT yet, create one. I used this prompt to generate one:

Make a personal knowledge assistant that will assist me with learning processes, help make complex problems more approachable and summarize topics into something easier to remember.

Switch to the Configure tab where we can customize our GPT precisely. On the bottom of the tab we can press the Create new action button to create a new action for our GPT.

The first thing we need is the OpenAPI schema for our API. We can get it from our API by adding /openapi to the end of our API address. For example:

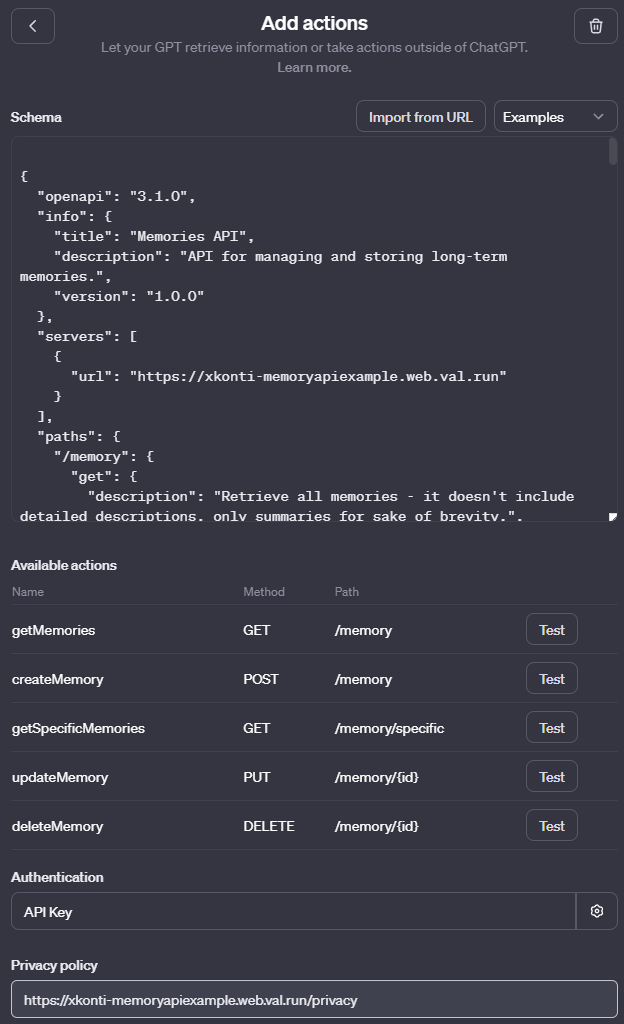

https://xkonti-memoryApiExample.web.val.run/openapiClick the Import from URL button and paste that address into the input field. Then hit the Import button.

Immediately you will see a red error message: Could not find a valid URL in 'servers'

In the OpenAPI schema replace the <APIURL> with the url of your API. Make sure you replace all capital letters with lowercase ones. For example:

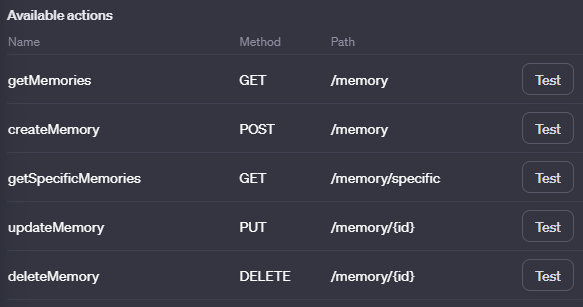

"url": "https://xkonti-memoryapiexample.web.val.run"Now you should see that the schema was imported successfully as there are 5 available actions that loaded below:

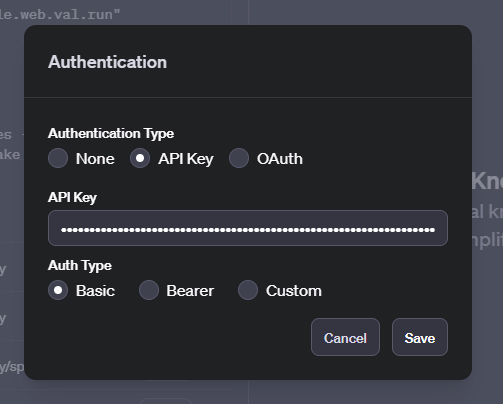

Let’s move on to the next step in the action configuration. The action needs to authenticate with the API, so let’s set it in the Authentication section. Click the None and then select API Key in the popup.

The API Key needs to take shape of <name>:<api-key>. In our example it will be:

novelbuddy:Wrangle-Chapped-Monkhood4-Domain-SuspendBut before pasting it into the API Key field it needs to be base64 encoded first. You can use the base64encode.org to do it quickly. Paste it into the input field and hit Encode. Then copy the encoded string and paste it into the API Key field. His Save to save the authentication configuration.

The last thing to configure is the Privacy Policy. Simply paste your API url and add /privacy at the end. For example: https://xkonti-memoryApiExample.web.val.run/privacy.

Now we have the GPT action fully configured. You can press the < chevron to get back to the GPT configuration.

Prompt

Your GPT should have the long-term memory action at it’s disposal now. Unfortunately it might not be sure how to use it properly yet. Let’s add a prompt section that will instruct the GPT how to use it. Something like this works well in my case:

# Long-term memory

At some point the user might ask you to do something with "memory". Things like "remember", "save to memory", "forget", "update memory", etc. Please use corresponding actions to achieve those tasks. User might also ask you to perform some task with the context of your "memory" - in that case fetch all memories before proceeding with the task. The memories should be formed in a clear and purely informative language, void of unnecessary adjectives or decorative language forms. An exception to that rule might be a case when the language itself is the integral part of information (snippet of writing to remember for later, examples of some specific language forms, quotes, etc.).

Structure of a memory:

- name - a simple name of the memory to give it context at a glance- description - a detailed description of the thing that should be remembered. There is no length limit.- summary - a short summary of the memory. This should be formed in a way that will allow for ease of understanding which memories to retrieve in full detail just by reading the list of summaries. If there are some simple facts that have to be remembered and are the main point of the memory they should be included in the summary. The summary should also be written in a compressed way with all unnecessary words skipped if possible (more like a set of keywords or a Google Search input).- reason - the reason for the remembering - this should give extra information about the situation in which the memory was requested to be saved.

The memory accessed through those actions is a long-term memory persistent between various conversations with the user. You can assume that there already are many memories available for retrieval.

In some situations you might want to save some information to your memory for future recall. Do it in situations where you expect that some important details of the conversation might be lost and should be preserved.

Analogously you can retrieve memories at any point if the task at hand suggests the need or there isn't much information about the subject in your knowledge base.Hit the Save button on the top right corner of the page to save the GPT. Thanks to the privacy policy being set in your action you can choose any publishing option.

⚠️ Keep in mind that any user of your GPT will be able to read/add/remove the same set of memories. Nothing is private here.

Usage

You should now have a fully functional GPT with a long-term memory. What can you do with it?

The prompt we added to the GPT should allow you to use the memory keyword in your prompts to hint the GPT that you want to use the long-term memory. You should be able to:

- Ask for existing memories:

What do you have in your memory? - Ask it to search it’s memory for something specific:

Do you remember the dimensions of my shed? - Ask it to remember something new:

Please remember that I need to buy some milk. - Ask it to remember something from the ongoing conversation:

Remember that. Make sure to note cost estimates we discussed. - Ask it to forget something:

Forget that I need to buy some milk. - Ask it to forget a memory you just talked about:

Delete this memory, please. - Ask it to forges a set of memories:

Forget all memories related taxes. - Identify questions by it’s id:

Please show me what "summary" you would generate for the memory "3a770850-849f-11ee-a182-4f0453a7b1ee" if you were saving it as a new memory.

How it works

The long-term memory API is a surprisingly straight forward concept. It simply allows to manipulate a list of memories. There is nothing else to it.

Tech stack

The API is running on Val Town which uses Deno runtime. The API is written in TypeScript and uses the Hono web framework to handle HTTP requests. The memories are stored using the Blob storage that Val Town provides.

Storage

The memories are sored in a Blob storage that Val Town provides. All memories related to a specific GPT (name) are stored as a single JSON under the key <blogKeyPrefix><name>. In case of this example it would be gpt:memories:novelbuddy.

Each memory object contains:

id- an unique identifier of the memory (UUID v4) - this is used to identify the memory.name- a simple name of the memory to give it context at a glance.description- a detailed description of the thing that should be remembered.summary- a short summary of the memory - this is used by ChatGPT to quickly search a list of memories.reason- the reason for the remembering - this should give extra information about the situation in which the memory was requested to be saved.

As an example consider the following Val code that displays the memories stored from the demonstration at the beginning of this post:

import { blob } from "https://esm.town/v/std/blob";export const showMemories = (async () => { return await blob.getJSON("gpt:memories:echo");})();It returns the following JSON stored in the blob:

[ { "id": "3a770850-849f-11ee-a182-4f0453a7b1ee", "name": "Immune Humans' Mutations", "description": "In the novel 'Abandoned Earth', a subset of humans develop genetic mutations that grant them immunity to The Entity's influence. This mutation seems to be a result of quantum fluctuations affecting biological processes, leading to unique genetic variations. These mutations confer not just resistance to The Entity's manipulation but also could possibly endow these humans with unique abilities or traits that differ from the general population. The nature, extent, and implications of these mutations are a significant aspect of the novel, impacting the plot and the interactions between characters, particularly in the context of the resistance against The Entity.", "summary": "Subset of humans in 'Abandoned Earth' develop mutations granting immunity to The Entity's influence, possibly due to quantum fluctuations in biology. These mutations may give unique abilities and significantly impact plot and character dynamics, especially in resistance against The Entity.", "reason": "To further research and develop the concept of genetic mutations enabling immunity to The Entity's influence, particularly focusing on the implications of quantum fluctuations in biological processes." }, { "id": "a2a48eb0-8e2d-11ee-896d-175ca65d3a1a", "name": "Life Essence and Biological Processes", "description": "Explore the role of life essence in enabling biological processes, with a focus on how dimensional interference, particularly from the Incorporeal dimension, facilitates the existence of these processes. This exploration should delve into the complex interplay between the Physical and Incorporeal dimensions, examining how life essence acts as a crucial element in the emergence and sustenance of life in the Physical dimension.", "summary": "Expanding on the role of life essence in biological processes, emphasizing the interaction between the Physical and Incorporeal dimensions.", "reason": "To deepen the understanding of how life essence, influenced by dimensional interference, is essential for biological processes in the novel's universe." }]Endpoints

The memories can be manipulated using 5 endpoints:

GET /memory- this endpoint returns a list of all memories. Those memories have thesummaryfield but lackdescriptionfield. This is to reduce the size of the returned JSON so that GPT can quickly search through the list of memories without confusing it too much with excessive details.GET /memory/specific?ids=<id1,id2,id3...>- this endpoint returns a list of memories with the full details. It accepts a list of memory ids to retrieve. This is used when the GPT wants to retrieve a specific memory / memories. It’s possible for the GPT to first retrieve the list of memories and then request the specific ones in a single prompt.POST /memory- this endpoint allows to add a new memory. It accepts a JSON object with the memory details. It returns theidof the newly created memory. The GPT can utilize the returnedidto reference the memory in the future.PUT /memory/:id- this endpoint allows to update an existing memory. It needs theidto be specified. If there is no memory with the specifiedidit will return create na new memory instead.DELETE /memory/:id- this endpoint allows to delete an existing memory. It needs theidto be specified. If there is no memory with the specifiedidit will return a 404 error.

Additionally there are 2 more utility endpoints:

GET /privacy- this endpoint returns a simple privacy policy for the API.GET /openapi- this endpoint returns the OpenAPI schema for the API. It currently isn’t aware of the url it’s deployed on, so it will return the schema with<APIURL>placeholder. You need to replace it with the url of your API.

Privacy policy

The privacy policy is a very simple policy generated by ChatGPT. It’s generated by a separate Val:

You can easily fork it and point your API to that one instead.

Conclusion

I hope you found this guide useful. This approach is very simplistic, yet it can be very powerful. There’s a lot of room for improvement even by just changing up the prompt. Using IDs to reference memories from other memories? Providing an endpoint with more detailed description on how to use the long-term memory action to save some space in the prompt? Using a more complex storage to allow for more data to be stored and queried in a more interesting way? The possibilities are endless. Consider it a good starting point for your own experiments.